You might have seen headlines this week about the Samsung Galaxy S23 Ultra taking so-called “fake” moon pictures. Ever since the S20 Ultra, Samsung has had a feature called Space Zoom that marries its 10X optical zoom with massive digital zoom to give a combined 100X zoom. In marketing shots, Samsung has shown its phone taking near-crystal-clear pictures of the moon, and users have done the same on a clear night.

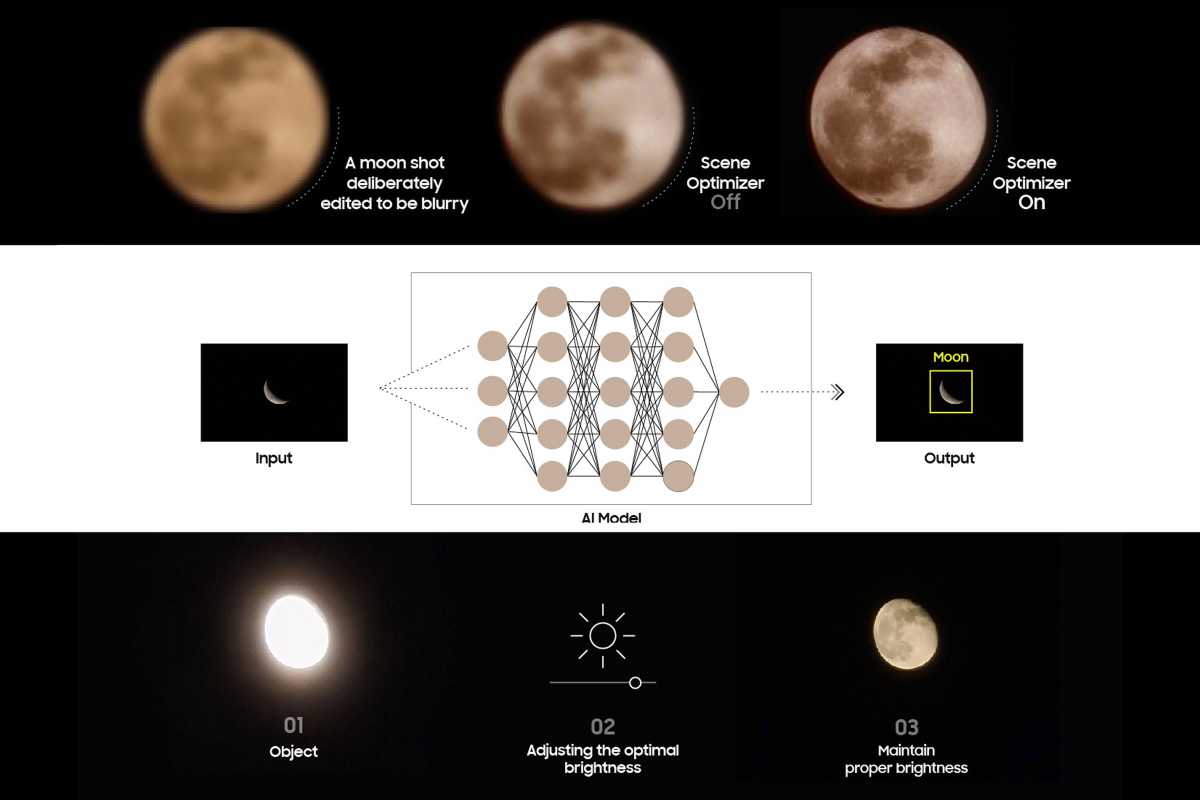

But a Redditor has proven that Samsung’s incredible Space Zoom is using a bit of trickery. It turns out that when taking pictures of the moon, Samsung’s AI-based Scene Optimizer does a whole lot of heavy lifting to make it look like the moon was photographed with a high-resolution telescope rather than a smartphone. So when someone takes a shot of the moon (in the sky or on a computer screen, as in the Reddit post), Samsung’s computational engine takes over and clears up the craters and contours that the camera had missed.

In a follow-up post, they prove beyond much doubt that Samsung is indeed adding “moon” imagery to photos to make the shot clearer. As they explain, “The computer vision module/AI recognizes the moon, you take the picture, and at this point, a neural network trained on countless moon images fills in the details that were not available optically.” That’s a bit more “fake” than Samsung lets on, but it’s still very much to be expected.

Even without the investigative work, it should be fairly obvious that the S23 can’t naturally take clear shots of the moon. While Samsung says Space Zoom shots using the S23 Ultra are “capable of capturing images from an incredible 330 feet away,” the moon is about 238,900 miles away, or roughly 1,261,392,000 feet. It’s also a quarter the size of the earth. Smartphones have no trouble taking clear photos of skyscrapers that are more than 330 feet away, after all.
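To put those two distances side by side, here is a minimal sketch of the arithmetic. It assumes an average Earth-moon distance of about 238,900 miles, which is the mileage that matches the 1,261,392,000-foot figure; the variable and function names are purely illustrative.

```python
FEET_PER_MILE = 5280

# Assumption: approximate average Earth-moon distance in miles.
MOON_DISTANCE_MILES = 238_900

# The distance Samsung quotes for Space Zoom shots.
SPACE_ZOOM_CLAIM_FEET = 330


def miles_to_feet(miles):
    """Convert a distance in miles to feet."""
    return miles * FEET_PER_MILE


moon_distance_feet = miles_to_feet(MOON_DISTANCE_MILES)

# How many times farther away the moon is than the quoted 330 feet.
ratio = moon_distance_feet / SPACE_ZOOM_CLAIM_FEET

print(f"Moon distance: {moon_distance_feet:,} feet")
print(f"That is about {ratio:,.0f} times the 330-foot figure")
```

Under that assumption, the moon sits a few million times farther away than the distance Samsung quotes, which is why the comparison says more about marketing copy than about optics.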

Of course, the moon’s distance doesn’t tell the whole story. The moon is essentially a light source set against a dark background, so the camera needs a bit of help to capture a clear image. Here’s how Samsung explains it: “When you’re taking a photo of the moon, your Galaxy device’s camera system will harness this deep learning-based AI technology, as well as multi-frame processing in order to further enhance details. Read on to learn more about the multiple steps, processes, and technologies that go into delivering high-quality images of the moon.”

It’s not all that different from features like Portrait Mode, Portrait Lighting, Night Mode, Magic Eraser, or Face Unblur. It’s all using computational awareness to add, adjust, and edit things that aren’t there. In the case of the moon, it’s easy for Samsung’s AI to make it seem like the phone is taking incredible pictures because Samsung’s AI knows what the moon looks like. It’s the same reason why the sky sometimes looks too blue or the grass too green. The photo engine is applying what it knows to what it sees to mimic a higher-end camera and compensate for normal smartphone shortcomings.

The difference here is that, while it’s common for photo-taking algorithms to segment an image into parts and apply different adjustments and exposure controls to them, Samsung is also using a limited form of AI image generation on the moon to blend in details that were never in the camera data to begin with. But you wouldn’t know it, because the moon’s details always look the same when viewed from Earth.

Samsung says the S23 Ultra’s camera uses Scene Optimizer’s “deep-learning-based AI detail enhancement engine to effectively eliminate remaining noise and enhance the image details even further.”

Samsung

What will Apple do?

Apple is heavily rumored to add a periscope zoom lens to the iPhone 15 Ultra for the first time this year, and this controversy will no doubt weigh into how it trains its AI. But you can be assured that the computational engine will do a fair amount of heavy lifting behind the scenes, as it does now.

That’s what makes smartphone cameras so great. Unlike point-and-shoot cameras, our smartphones have powerful brains that can help us take better photos and help bad photos look better. They can make nighttime photos seem like they were taken with good lighting and simulate the bokeh effect of a camera with an ultra-fast aperture.

And it’s what will let Apple get incredible results from 20X or 30X zoom from a 6X optical camera. Since Apple has thus far steered clear of astrophotography, I doubt it will go as far as sampling high-resolution moon photos to help the iPhone 15 take clear shots, but you can be sure that its Photonic Engine will be hard at work cleaning up edges, preserving details, and boosting the capabilities of the telephoto camera. And based on what we get in the iPhone 14 Pro, the results will surely be spectacular.

Whether it’s Samsung or Apple, computational photography has enabled some of the biggest breakthroughs over the past several years, and we’ve only just scratched the surface of what it can do. None of it is actually real. And if it was, we’d all be a lot less impressed with the photos we take with our smartphones.