Apple’s out-of-the-blue announcement last week that it was adding a bunch of features to iOS involving child sexual abuse materials (CSAM) generated an entirely predictable reaction. Or, more accurately, reactions. Those on the law-enforcement side of the spectrum praised Apple for its work, and those on the civil-liberties side accused Apple of turning iPhones into surveillance devices.

It’s not surprising at all that Apple’s announcement would be met with scrutiny. If anything is surprising about this whole story, it’s that Apple doesn’t seem to have anticipated all the pushback its announcement received. The company had to post a Frequently Asked Questions file in response. If Q’s are being FA’d in the wake of your announcement, you probably botched your announcement.

Such an announcement deserves scrutiny. The problem for those seeking to drop their hot takes about this issue is that it’s extremely complicated and there are no easy answers. That doesn’t mean that Apple’s approach is fundamentally right or wrong, but it does mean that Apple has made some choices that are worth exploring and debating.

Apple’s compromise

I’m not sure quite why Apple chose this moment to roll out this technology. Apple’s Head of Privacy implies that it’s because it was ready, but that’s a bit of a dodge: Apple has to choose what technology to prioritize, and it prioritized this one. Apple may be anticipating legal requirements for it to scan for CSAM. It’s possible that Apple is working on increased iCloud security features that require this approach. It’s also possible that Apple just decided it needed to do more to stop the distribution of CSAM.

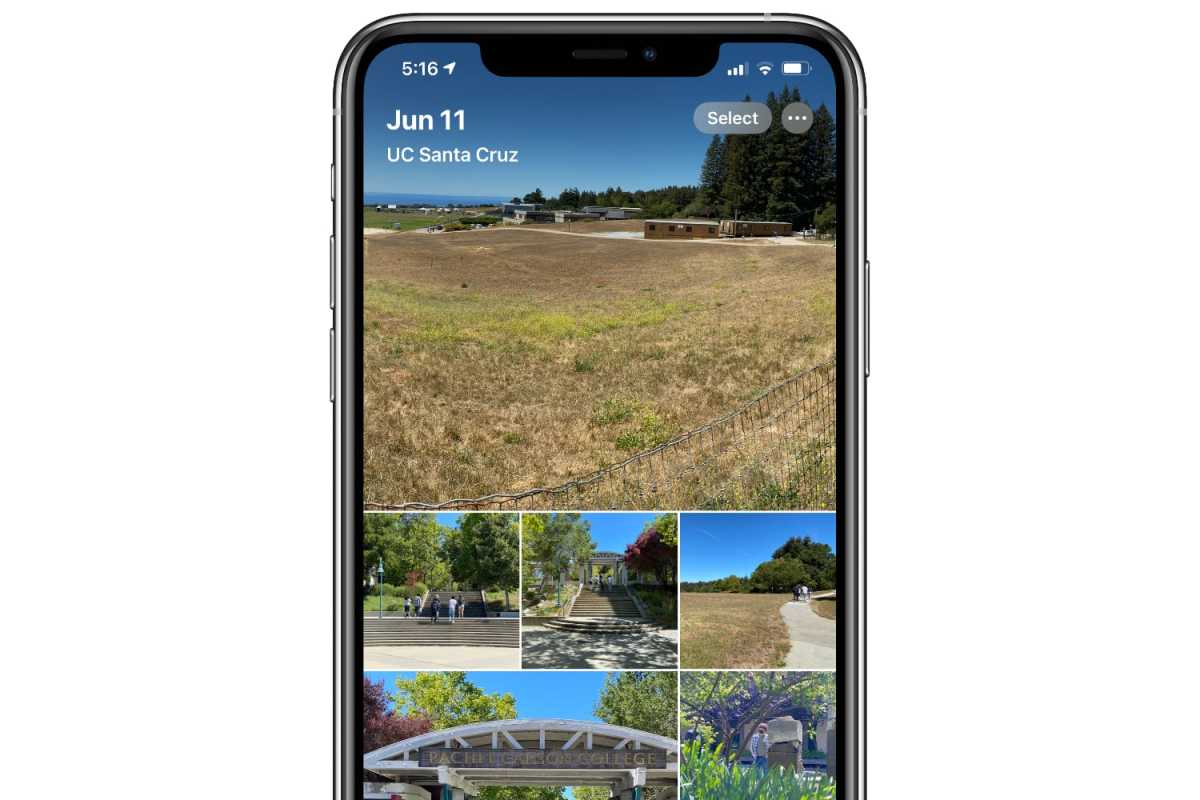

The biggest clue about Apple’s motivations is the very specific way this feature has been implemented. I’ll spare you the long explanation, but in short: Apple is comparing images against a hash of illegal images compiled by the National Center for Missing and Exploited Children. It’s scanning new images that are going to be synced with iCloud Photos. It’s not scanning all the photos on your device, and Apple isn’t scanning all the photos it’s storing on its iCloud servers.

In short, Apple has built a CSAM detector that sits at the doorway between your device and iCloud. If you don’t sync photos with iCloud, the detector never runs.
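
To make that doorway idea concrete, here is a minimal sketch of a check that only runs when a photo is about to be synced. Everything in it is an assumption for illustration: the names `KNOWN_HASHES`, `image_hash` and `check_before_upload` are hypothetical, and SHA-256 is only a stand-in, not a claim about the matching function Apple actually uses.

```python
import hashlib
from typing import Optional

# Hypothetical stand-in for the database of hashes of known illegal images.
# The single placeholder entry exists only so the sketch has something to match.
KNOWN_HASHES = {hashlib.sha256(b"known-bad-example").hexdigest()}


def image_hash(image_bytes: bytes) -> str:
    # Assumption: SHA-256 is used purely as a placeholder hash function.
    return hashlib.sha256(image_bytes).hexdigest()


def check_before_upload(image_bytes: bytes, icloud_sync_enabled: bool) -> Optional[bool]:
    """Return None if the check never runs, otherwise whether the hash matched."""
    if not icloud_sync_enabled:
        # No sync means the photo never reaches the doorway, so nothing is checked.
        return None
    return image_hash(image_bytes) in KNOWN_HASHES
```

The point of the sketch is the first branch: with syncing turned off, the function returns before any comparison happens, which mirrors the claim that the detector never runs for photos that stay on the device.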

CSAM checks happen with images uploaded to iCloud, not on your iPhone’s photo library.

IDG

This all leads me to believe that there’s another shoe to drop here, one that will allow Apple to make its cloud services more secure and private. If this scanning system is essentially the trade-off that allows Apple to provide more privacy for its users while not abdicating its moral duty to prevent the spread of CSAM, great. But there’s no way to know until Apple makes such an announcement. In the meantime, all those potential privacy gains are theoretical.

Where is the spy?

In recent years, Apple has made it clear that it considers the analysis of user data that occurs on our devices to be fundamentally more private than the analysis that runs in the cloud. In the cloud, your data must be decrypted to be analyzed, opening it up to pretty much any form of analysis. Any employee with the right level of access could also just flip through your data. But if all that analysis happens on your device (this is why Apple’s modern chips have a powerful Neural Engine component to do the job), that data never leaves home.

Apple’s approach here calls all of that into question, and I suspect that’s the source of some of the greatest criticism of this announcement. Apple is making decisions that it thinks will enhance privacy. Nobody at Apple is scanning your photos, and nobody at Apple can even look at the potential CSAM images until a threshold has passed that reduces the chance of false positives. Only your device sees your data. Which is great, because our devices are sacred and they belong to us.

Except… that there’s now going to be an algorithm running on our devices that’s designed to observe our data, and if it finds something that it doesn’t like, it will then connect to the internet and report that data back to Apple. While today it has been purpose-built for CSAM, and it can be deactivated just by shutting off iCloud Photo Library syncing, it still feels like a line has been crossed. Our devices won’t just be working for us, but will also be watching us for signs of illegal activity and alerting the authorities.

The risk for Apple here is huge. It has invested an awful lot of time in equating on-device actions with privacy, and it risks poisoning all of that work with the perception that our phones are no longer our castles.

It’s not the tool, but how it’s used

In many ways, this is yet another facet of the greatest challenge the technology industry faces in this era. Technology has become so important and powerful that every new development has enormous, society-wide implications.

With its on-device CSAM scanner, Apple has built a tool carefully calibrated to protect user privacy. If building this tool enabled Apple to finally offer broader encryption of iCloud data, it might even be a net increase in user privacy.

But tools are neither good nor evil. Apple has built this tool for a good purpose, but every time a new tool is built, all of us need to imagine how it might be misused. Apple seems to have very carefully designed this feature to make it more difficult to subvert, but that’s not always enough.

Imagine a case where a law enforcement agency in a foreign country comes to Apple and says that it has compiled a database of illegal images and wants it added to Apple’s scanner. Apple has said, bluntly, that it will refuse all such requests. That’s encouraging, and I have little doubt that Apple would abandon most countries if they tried to pull that maneuver.

But would it be able to say no to China? Would it be able to say no to the U.S. government if the images in question would implicate members of terrorist organizations? And in a decade or two, will policies like this be so commonplace that when the moment comes that a government asks Apple or its equivalents to begin scanning for illegal or subversive material, will anyone notice? The first implementation of this technology is to stop CSAM, and nobody will argue against trying to stop the exploitation of children. But will there be a second implementation? A third?

Apple has tried its best to find a compromise between violating user privacy and stopping the distribution of CSAM. The very specific way this feature is implemented proves that. (Anyone who tries to sell you a simplified story about how Apple just wants to spy on you is, quite frankly, someone who is not worth listening to.)

But just because Apple has done its due diligence and made some careful choices in order to implement a tool to stop the spread of heinous material doesn’t mean that it’s off the hook. By making our phones run an algorithm that isn’t meant to serve us, but surveils us, it has crossed a line. Perhaps it was inevitable that the line would be crossed. Perhaps it’s inevitable that technology is leading us to a world where everything we say, do and see is being scanned by a machine-learning algorithm that will be as benevolent or malevolent as the society that implemented it.

Even if Apple’s heart is in the right place, my confidence that its philosophy will be able to withstand the future desires of law enforcement agencies and authoritarian governments is not as high as I want it to be. We can all be against CSAM and admire the clever way Apple has tried to balance these two conflicting needs, while still being worried about what it means for the future.