I haven’t written much about Vision Pro in the month since Apple took the wraps off its headset – er, excuse me, spatial computer. That’s in part because I still haven’t tried it out for myself, but also because I’ve been slowly digesting the staggering amount of technology that the company showed off with its latest device.

In the interim, there’s been plenty of speculation about Apple’s ultimate goals with this product category, and whether a truly lightweight augmented reality device is even achievable with our current technology.

As I’ve spent time considering the Vision Pro, I realized that Apple’s story for the device is shaped as much by what it didn’t show us as by what it did. That goes for big categories like fitness or gaming, which didn’t get much time in the Vision Pro announcement, but also for small, individual features that already show up in other Apple products but are conspicuously absent from the Vision Pro, though they seem ideally suited to the future of this space.


Two hands and a map

Apple demoed a number of its existing applications that have been ported to the Vision Pro, but the ones it showed off were definitely of a specific bent: productivity tools like Keynote or Freeform, entertainment apps including Music or TV, communication tools such as FaceTime and Messages, and experiential apps like Photos and Mindfulness.

One tool not present (as far as we can tell) on the Vision Pro’s home screen? Maps.


Maps on the iPhone has a very good AR walking experience.

Dan Moren

This strikes me as … not odd, exactly, for reasons I’ll get into, but interesting. Because Maps is one place where Apple has already developed a really good augmented reality interface. Starting in iOS 15, the company rolled out AR-based walking directions, which let you hold up your iPhone and see giant labels overlaid on a camera view. If you’ve missed it, that’s no surprise: it was first available in only a few cities but has quietly expanded in the last couple of years to more than 80 regions worldwide.

I only ended up trying it out recently, and I was impressed with its utility, but annoyed by having to walk around holding up my iPhone. That made me even more certain that this feature would seem perfectly at home on a pair of augmented reality glasses. But it looks like it’s nowhere to be found on the Vision Pro.

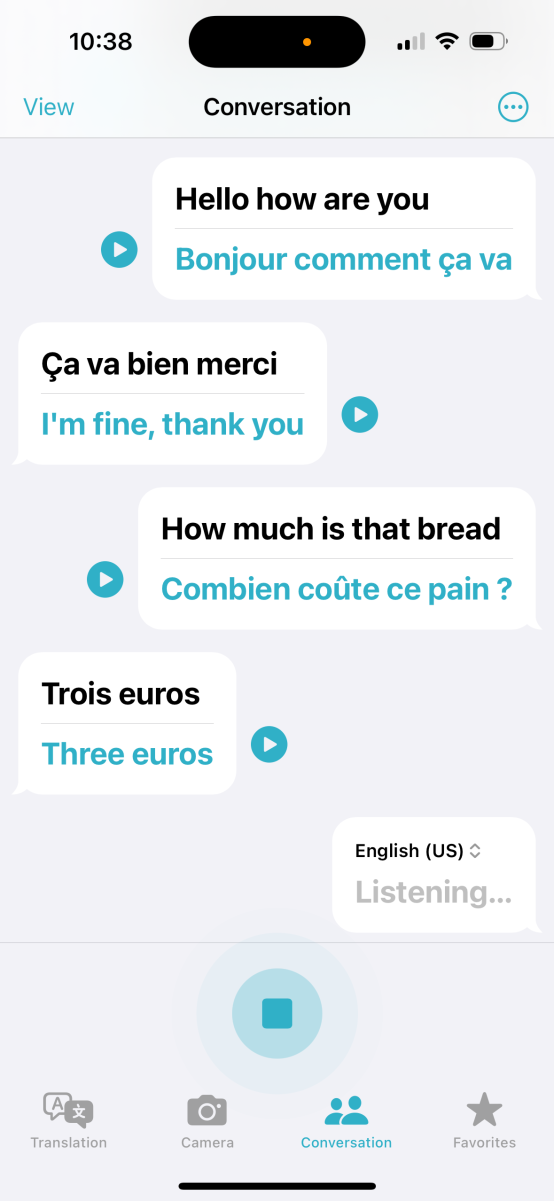

The iPhone’s Translate app would be an ideal feature for the Vision Pro.

Lost in translation

Any science-fiction fan has at some point or another coveted Star Trek’s universal translator or The Hitchhiker’s Guide to the Galaxy’s babelfish: the invisible technology that lets you instantly understand and be understood by anybody else. (Not to mention ironing out a lot of pesky plot problems.)

Apple’s been gradually beefing up its own translation technology over the past several years, with the addition of the Translate app and a systemwide translation service. While perhaps not as extensive as Google’s own offering, Apple’s translation software has been slowly catching up, even adding a conversation mode that can automatically detect people speaking in multiple languages, translate their words, and play them back in audio.


Visual Lookup on a headset seems like an ideal match.

This is a killer app for traveling, and yet another feature that would seem right at home on an augmented reality device. Imagine being able to instantly have a translation spoken into your ear, or displayed on a screen in front of your eyes. Several years ago, I was on an extended stay in India and, when the air conditioner in my now-wife’s apartment broke, I had to call her and have one of her co-workers translate for the repairman, who spoke only Hindi. (To be fair, I did try to use Google’s similar conversation mode at the time, but it ended up being rather awkward.)

And it’s not only for conversations either. Apple’s systemwide translation framework already offers the ability to translate text in a photo, and the Translate app itself has a live camera view, meaning that you could even browse through a store while abroad and have items you see around you translated on the fly.

Don’t look up

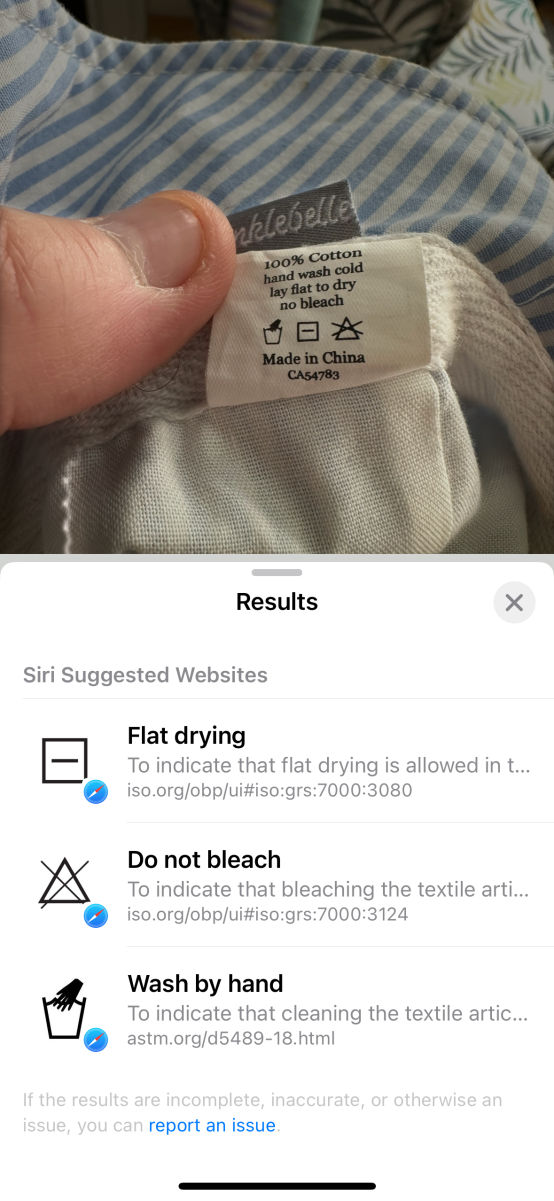

One of the most mind-blowing features that Apple has developed in recent years is Visual Lookup. When first introduced, it leveraged machine learning to identify a few classes of items seen in photos – including flowers, dogs, landmarks – with somewhat mixed results. But in iOS 17, Apple has improved this feature immensely, both in terms of reliability and by adding other categories, such as looking up recipes from a picture of a meal or identifying and interpreting those perplexing laundry symbols.

This, to me, is the real dream of augmented reality. To be able to look at something and instantly pull up information about it is a bit like having an annotated guide to the whole world. Moreover, it’s another feature that meshes perfectly with the idea of a lightweight AR wearable device: rather than looking at, say, a work of art, and then burying your nose in your phone to read more, you could instead see the details right in front of you, or could even have interesting things overlaid on or interacting with the actual artwork itself.


Apple didn’t show off any Visual Lookup feature in its introduction to Vision Pro, but this is one place where there may be another shoe to drop. MacRumors’s Steve Moser poked around in the Vision Pro software development kit and found mention of a feature called Visual Search, which sounds as though it would do a lot of the same things, getting information from the world around you and augmenting it with additional details.

Vision of the future

On the face of it, the omission of all of these features makes sense with the story Apple told about where and when you’re likely to use the Vision Pro. Mapping, translation, and visual lookup are the kind of features that are most useful when you’re out and about, but the Vision Pro is still fundamentally a device that you go to, not one that you take with you.

Calling the Vision Pro a spatial computer might be a buzz-worthy way of avoiding terms like “headset” or “metaverse,” but it also conveys something intrinsic about the product: it really is the mixed reality equivalent of a computer and, even there, almost more of a desktop than a laptop, given that it seems to be something that you mostly use in a fixed location.

But just as laptops and tablets and smartphones evolved the concept of a computer from something you sit at a desk to use to something that you put in a bag or your pocket, it seems obvious that the puck that Apple is skating towards is the Vision Pro’s equivalent of a MacBook or iPhone. Hopefully, it won’t take as long for Apple to get there as it did for the smartphone to evolve from the desktop computer, but don’t be surprised if what we ultimately get is just as different from the Vision Pro as the iPhone was from the original Mac.